Latest version: 4.3.x

Kafka Consumer monitoring

Lenses continuously monitors all Kafka consumers in a Kafka cluster. It uses the native Kafka client to calculate, in real time, the lag per partition (the number of messages that have not yet been consumed).

Consumer Groups represent Kafka applications that consume data from one or more Kafka topics. The consumers typically commit their offsets to a system topic called `__consumer_offsets` to persist their state (the point up to which they have consumed).
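The lag of a partition is the difference between its latest offset and the offset the group has committed. The following is a minimal Python sketch of that arithmetic, assuming the offsets have already been fetched. With a client such as kafka-python, `KafkaConsumer.end_offsets()` and `KafkaConsumer.committed()` return these values. The function name `partition_lag` and the choice to treat an uncommitted partition as fully unconsumed are illustrative assumptions, not a description of how Lenses computes lag internally.

```python
from typing import Dict, Optional, Tuple

# A partition is identified by (topic, partition number).
TopicPartition = Tuple[str, int]


def partition_lag(
    latest_offsets: Dict[TopicPartition, int],
    committed_offsets: Dict[TopicPartition, Optional[int]],
) -> Dict[TopicPartition, int]:
    """Return lag per partition: latest offset minus committed offset.

    Assumption (hypothetical): a partition with no committed offset is
    treated as fully unconsumed, so its lag equals its latest offset.
    """
    lags = {}
    for tp, latest in latest_offsets.items():
        committed = committed_offsets.get(tp)
        consumed = committed if committed is not None else 0
        # Clamp at zero in case the two offsets were sampled at different moments.
        lags[tp] = max(latest - consumed, 0)
    return lags


latest = {("test-topic", 0): 1500, ("test-topic", 1): 3000}
committed = {("test-topic", 0): 1500, ("test-topic", 1): 1000}
print(partition_lag(latest, committed))
# → {('test-topic', 0): 0, ('test-topic', 1): 2000}
```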

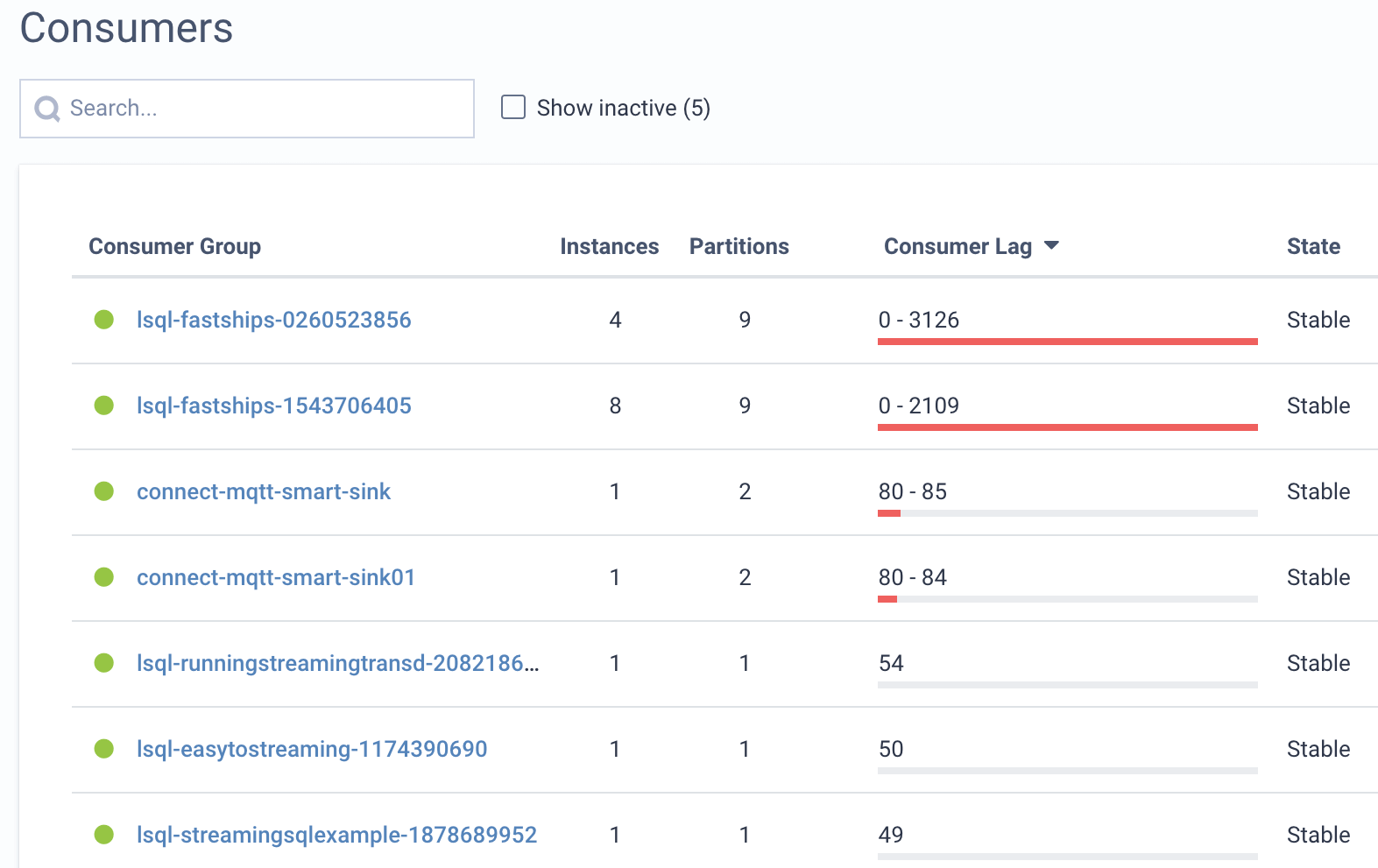

Monitor Kafka consumers

- From the Header Bar Menu, go to the Dashboard panel.

- On the side navigation, select Consumers under the Monitor section.

The red bar under the consumer lag column indicates the lag range (the minimum and maximum lag per partition). When a single partition is involved, the lag is shown as a single number.
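The summary rule for the lag column can be sketched in a few lines of Python. The function name and the exact `min - max` text format are assumptions for illustration; the Lenses UI draws this as a bar rather than a string.

```python
from typing import Iterable


def lag_range_label(lags: Iterable[int]) -> str:
    """Summarize per-partition lags as "min - max", or as a single
    number when only one partition is involved."""
    values = list(lags)
    if not values:
        raise ValueError("at least one partition lag is required")
    if len(values) == 1:
        return str(values[0])
    return f"{min(values)} - {max(values)}"


print(lag_range_label([0, 2000, 150]))  # → 0 - 2000
print(lag_range_label([42]))            # → 42
```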

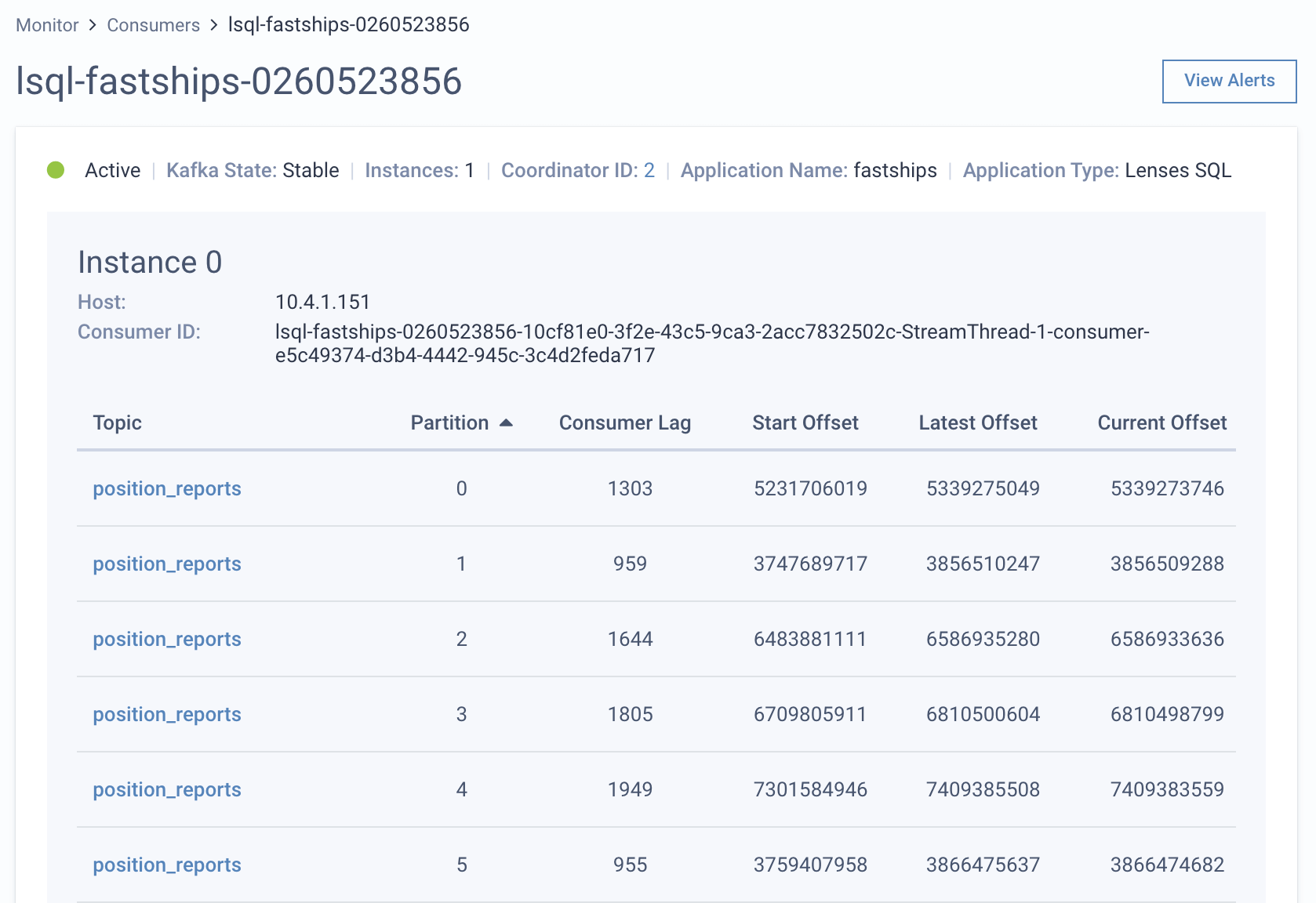

Kafka Consumer details

You can look into the details of each Kafka consumer group by clicking on it.

From the detail page, you can see how the topic partitions have been distributed to each consumer instance as well as the lag for each topic-partition.

- Coordinator ID is the ID of the Kafka broker that acts as the coordinator for this consumer group.

- Latest Offset is the latest offset of a partition.

- Current Offset is the offset up to which the consumer has read messages.
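The fields on the detail page can be modelled as a small data structure, with lag derived as Latest Offset minus Current Offset. In this Python sketch, the class and field names (`PartitionRow`, `ConsumerGroupDetail`, `consumer_id`) are hypothetical and do not correspond to a Lenses API. It groups topic-partitions by the consumer instance they are assigned to, which mirrors how the detail page presents them.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class PartitionRow:
    """One topic-partition row of a consumer group's detail view (hypothetical)."""
    topic: str
    partition: int
    consumer_id: str     # consumer instance the partition is assigned to
    latest_offset: int   # latest offset of the partition
    current_offset: int  # offset up to which the consumer has read

    @property
    def lag(self) -> int:
        # Messages written to the partition but not yet read by the group.
        return self.latest_offset - self.current_offset


@dataclass
class ConsumerGroupDetail:
    group_id: str
    coordinator_id: int  # ID of the broker acting as the group coordinator
    rows: List[PartitionRow] = field(default_factory=list)

    def by_consumer(self) -> Dict[str, List[PartitionRow]]:
        """Group topic-partitions by the consumer instance they are assigned to."""
        grouped: Dict[str, List[PartitionRow]] = defaultdict(list)
        for row in self.rows:
            grouped[row.consumer_id].append(row)
        return dict(grouped)


detail = ConsumerGroupDetail(
    group_id="test-group",
    coordinator_id=1,
    rows=[
        PartitionRow("test-topic", 0, "consumer-1", 1500, 1500),
        PartitionRow("test-topic", 1, "consumer-2", 3000, 1000),
    ],
)
for consumer, rows in detail.by_consumer().items():
    print(consumer, [(r.partition, r.lag) for r in rows])
```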

Consumer Groups may consume from multiple topics at the same time, and the load is automatically distributed across the application instances in the group. Kafka Connectors and Streaming SQL applications also use a consumer group when they consume events from Kafka.
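How partitions are spread across instances depends on the group's partition assignment strategy. Kafka ships several assignors, including range, round-robin and sticky. The following Python sketch shows a simplified round-robin distribution over partitions from several topics. It assumes every member subscribes to every topic, and it is not the exact algorithm of any particular Kafka assignor, nor of Lenses. The function name is hypothetical.

```python
from itertools import cycle
from typing import Dict, List, Tuple

TopicPartition = Tuple[str, int]


def round_robin_assign(
    members: List[str], partitions: List[TopicPartition]
) -> Dict[str, List[TopicPartition]]:
    """Deal sorted topic-partitions to sorted member IDs in turn.

    Simplified: assumes every member subscribes to every topic.
    """
    if not members:
        raise ValueError("a consumer group needs at least one member")
    ordered_members = sorted(members)
    assignment: Dict[str, List[TopicPartition]] = {m: [] for m in ordered_members}
    # cycle() repeats the members; zip() stops when the partitions run out.
    for member, tp in zip(cycle(ordered_members), sorted(partitions)):
        assignment[member].append(tp)
    return assignment


parts = [("orders", 0), ("orders", 1), ("payments", 0)]
print(round_robin_assign(["app-2", "app-1"], parts))
# → {'app-1': [('orders', 0), ('payments', 0)], 'app-2': [('orders', 1)]}
```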

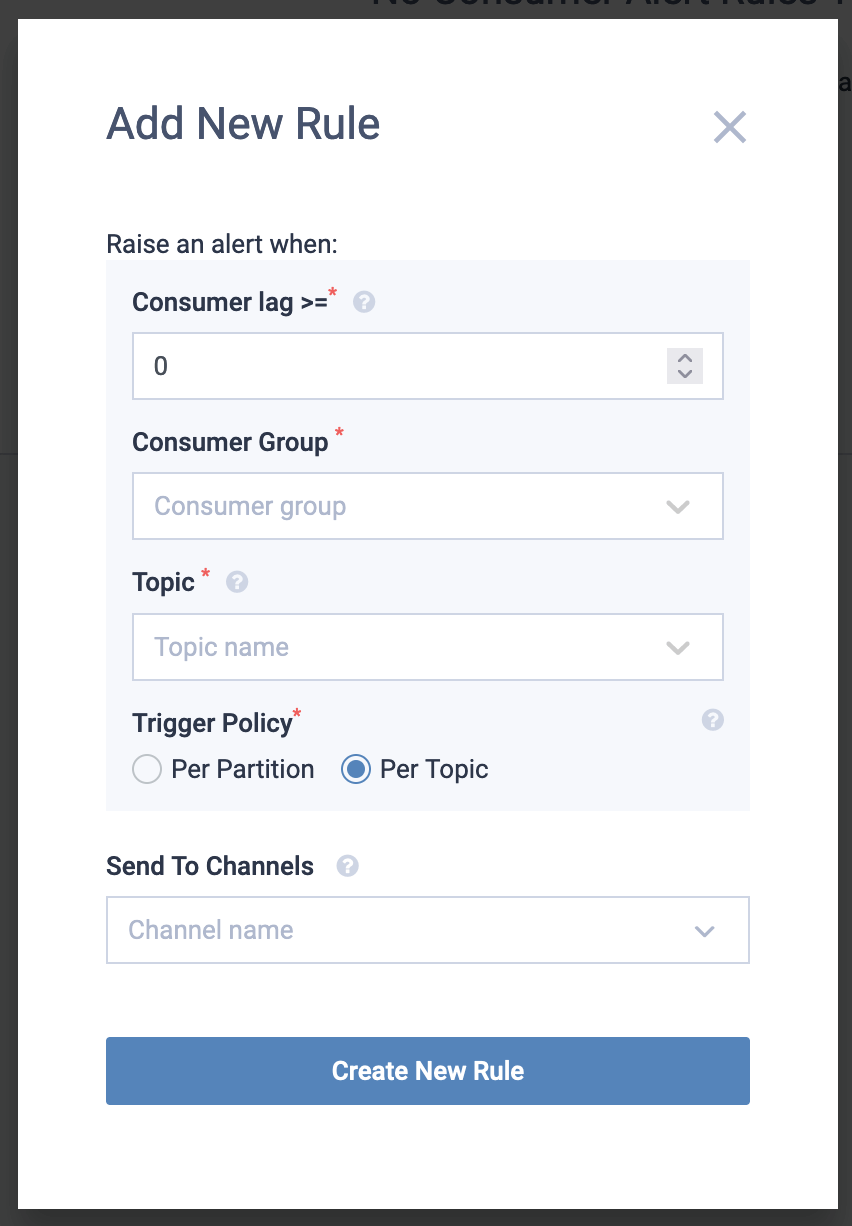

Kafka Consumer alerts

Alert Rules can be set to monitor consumer lag:

- Click on the View Alerts button to see existing rules set for this consumer.

- You can also navigate via Admin > Alert Rules > Custom Rules.

- Click on the “New Rule” button and fill in the details.

The alert is triggered when the consumer lag exceeds the configured threshold. There are two modes for triggering an alert:

- Per partition: a distinct event is triggered for each partition that exceeds the lag threshold.

- Per topic: an event is triggered when any of the topic's partitions exceeds the lag threshold, and it is not cleared until the lag of every partition is below the threshold.

Note that the Trigger Policy is set to Per Topic by default.
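The difference between the two trigger policies can be sketched as a small state machine for one consumer group and topic. This Python model is hypothetical: the class, enum and event tuples are illustrative and are not the Lenses alerting engine. It treats "exceeds" as `lag > threshold`, and it follows the rules described above, with Per topic as the default.

```python
from enum import Enum
from typing import Dict, List, Optional, Set, Tuple

Event = Tuple[str, Optional[int]]  # ("RAISED" | "CLEARED", partition or None)


class TriggerPolicy(Enum):
    PER_PARTITION = "per partition"
    PER_TOPIC = "per topic"


class LagAlertEvaluator:
    """Hypothetical evaluator for one (consumer group, topic) alert rule."""

    def __init__(self, threshold: int, policy: TriggerPolicy = TriggerPolicy.PER_TOPIC):
        self.threshold = threshold
        self.policy = policy
        self._alerting: Set[int] = set()  # partitions currently in alert
        self._topic_alerting = False

    def check(self, lags: Dict[int, int]) -> List[Event]:
        """Compare the latest per-partition lags with the previous state."""
        over = {p for p, lag in lags.items() if lag > self.threshold}
        events: List[Event] = []
        if self.policy is TriggerPolicy.PER_PARTITION:
            # One event per partition that crosses the threshold in either direction.
            events += [("RAISED", p) for p in sorted(over - self._alerting)]
            events += [("CLEARED", p) for p in sorted(self._alerting - over)]
            self._alerting = over
        else:
            # Raise when any partition exceeds the threshold; clear only once none does.
            if over and not self._topic_alerting:
                events.append(("RAISED", None))
            elif not over and self._topic_alerting:
                events.append(("CLEARED", None))
            self._topic_alerting = bool(over)
        return events


ev = LagAlertEvaluator(1000, TriggerPolicy.PER_PARTITION)
print(ev.check({1: 2000, 2: 10, 3: 2000}))  # → [('RAISED', 1), ('RAISED', 3)]
print(ev.check({1: 500, 2: 10, 3: 2000}))   # → [('CLEARED', 1)]
print(ev.check({1: 500, 2: 10, 3: 0}))      # → [('CLEARED', 3)]
```

With the default Per topic policy, the same sequence of lags would produce a single raise on the first check and a single clear on the last, which matches the pattern of events in the examples that follow.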

Examples:

- Consumer lag: 1000

- Consumer group: test-group

- Topic: test-topic

- Trigger Policy: Per partition

Alert Events

| ID | Summary | Category | Level | Timestamp |
|---|---|---|---|---|
| 2000 | Consumer group ’test-group’ is now below the threshold=1000 on topic ’test-topic’ and partition ‘3’. | Consumers | INFO | 1 day(s) ago |
| 2000 | Consumer group ’test-group’ is now below the threshold=1000 on topic ’test-topic’ and partition ‘1’. | Consumers | INFO | 2 day(s) ago |
| 2000 | Consumer group ’test-group’ has a lag of 2000 (threshold=1000) on topic ’test-topic’ and partition ‘3’. | Consumers | HIGH | 3 day(s) ago |
| 2000 | Consumer group ’test-group’ has a lag of 2000 (threshold=1000) on topic ’test-topic’ and partition ‘1’. | Consumers | HIGH | 3 day(s) ago |

- Consumer lag: 1000

- Consumer group: test-group

- Topic: test-topic

- Trigger Policy: Per topic

Alert Events

| ID | Summary | Category | Level | Timestamp |
|---|---|---|---|---|
| 2000 | Consumer group ’test-group’ lag is now below the threshold (1000) on topic ’test-topic’ for all the partitions. | Consumers | INFO | 2 day(s) ago |
| 2000 | Consumer group ’test-group’ has a max lag of 2000 (threshold=1000) on topic ’test-topic’ on at least one partition. | Consumers | HIGH | 3 day(s) ago |

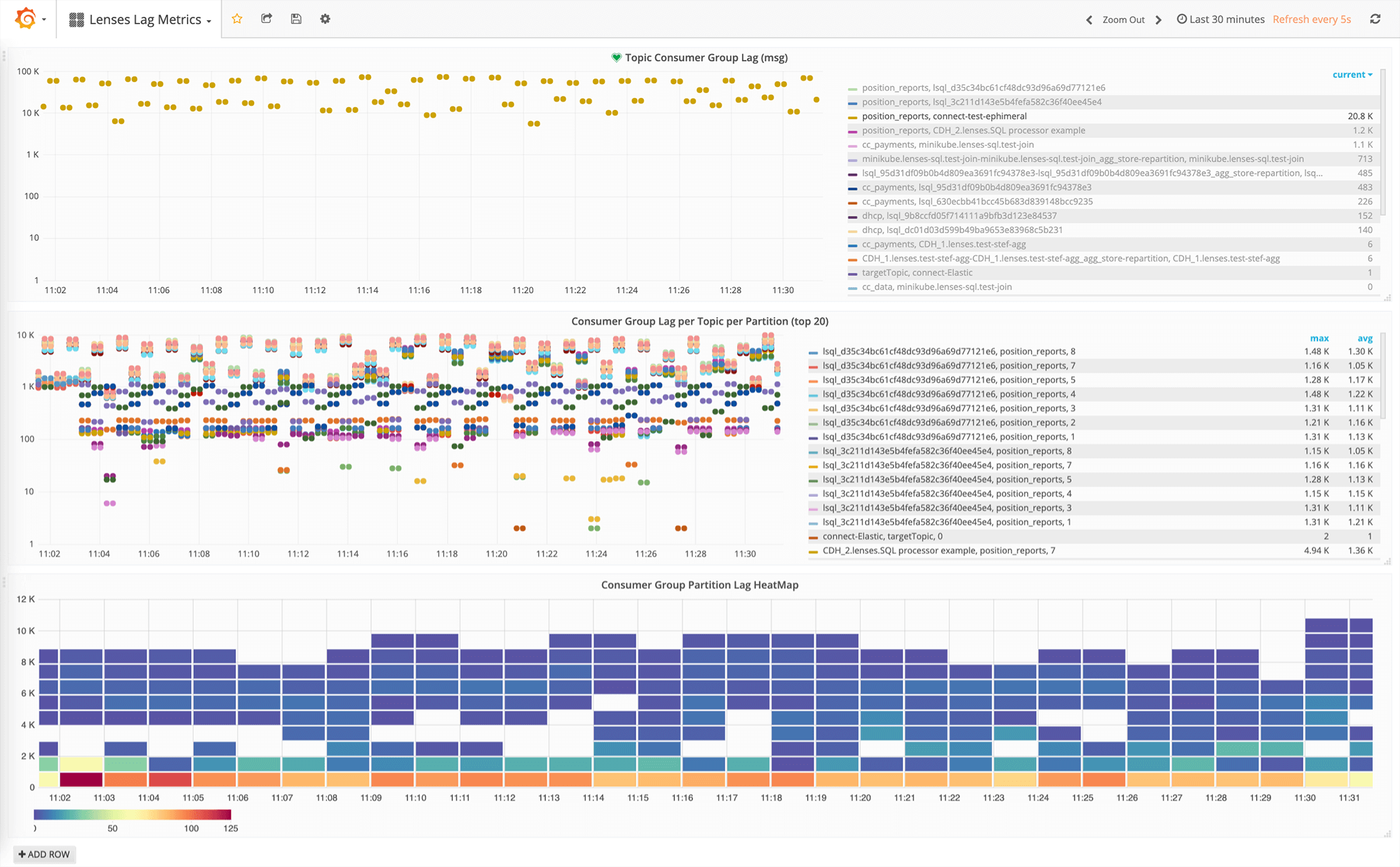

Consumer lag in Prometheus

To track the historical values of consumer lag in a time-series system such as Prometheus (with Grafana for dashboards), add Lenses as a scrape target. Lenses calculates consumer lag and exposes it in a Prometheus-compatible format under the Lenses API:

`<your-lenses-host>/metrics`
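Prometheus scrapes the `/metrics` path by default, so a scrape job whose target is the Lenses host and port is usually enough. To inspect the endpoint by hand, the following Python sketch downloads it and extracts the samples of one metric from the Prometheus text exposition format. The parser is deliberately simplified: it does not support escaped quotes in label values, and `fetch_metrics` does not handle authentication. The metric name `example_consumer_lag` is a placeholder. Check the actual output of your Lenses instance for the real metric and label names.

```python
import re
import urllib.request
from typing import Dict, List, Tuple

# One sample line: metric_name{label="value",...} value [timestamp]
# Simplified: escaped quotes inside label values are not supported.
_SAMPLE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$")
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_samples(text: str, metric: str) -> List[Tuple[Dict[str, str], float]]:
    """Return (labels, value) pairs for one metric from Prometheus text format."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        match = _SAMPLE.match(line)
        if match and match.group(1) == metric:
            labels = dict(_LABEL.findall(match.group(2) or ""))
            samples.append((labels, float(match.group(3))))
    return samples


def fetch_metrics(base_url: str) -> str:
    """Download the metrics page; authentication is not handled here."""
    url = base_url.rstrip("/") + "/metrics"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


sample = 'example_consumer_lag{group="test-group",topic="test-topic",partition="1"} 2000\n'
print(parse_samples(sample, "example_consumer_lag"))
# → [({'group': 'test-group', 'topic': 'test-topic', 'partition': '1'}, 2000.0)]
```

Against a live instance, the call would be something like `parse_samples(fetch_metrics("http://<your-lenses-host>"), "<metric name>")`.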

You can then use Prometheus as a data source in Grafana to chart consumer lag over time.

Kafka consumer permissions

You can use RBAC (Role-Based Access Control) to control which users have visibility and management roles for Kafka consumers. In addition, Data Centric Security and namespaces can further refine the level of access. See Application Permissions for more details.

- The permission needed to create and edit a consumer alert rule is `ManageAlertRules`.

- The `ViewKafkaConsumers` and `ViewTopic` permissions under the namespace are also recommended, as they are required to see the list of available consumer groups.
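As a checklist, the permissions above can be expressed as a small Python check. This is a hypothetical model for illustration only. It represents granted permissions as plain sets of names and does not call any Lenses API. Following the text, `ManageAlertRules` is checked on its own, while `ViewKafkaConsumers` and `ViewTopic` are checked against the permissions granted under the relevant namespace.

```python
from typing import Dict, Set

# Hypothetical model of the permissions described above.
ALERT_RULE_PERMISSION = "ManageAlertRules"
CONSUMER_LIST_PERMISSIONS = {"ViewKafkaConsumers", "ViewTopic"}


def alert_rule_access(user_perms: Set[str], namespace_perms: Set[str]) -> Dict[str, bool]:
    """Report whether a user can manage consumer alert rules and can see
    the consumer groups those rules would apply to."""
    return {
        "manage_alert_rules": ALERT_RULE_PERMISSION in user_perms,
        # Both permissions must be granted under the namespace.
        "list_consumer_groups": CONSUMER_LIST_PERMISSIONS <= namespace_perms,
    }


print(alert_rule_access({"ManageAlertRules"}, {"ViewTopic"}))
# → {'manage_alert_rules': True, 'list_consumer_groups': False}
```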